Why (Open Source) DeepSeek's shock is important

How a Chinese startup is challenging AI giants with open-source innovation, achieving ChatGPT-level performance at 2% of the cost. Is this the future of accessible AI?

As an AI enthusiast, I've been watching the AI industry evolve for a while now, and something fascinating happened yesterday. It's like watching a David vs. Goliath story unfold, but with a twist that could reshape the entire AI landscape. The protagonist? DeepSeek, a relatively new player that's causing quite a shock in the world of artificial intelligence right now. Or maybe it's just hype, but either way, there's something to learn from it.

You know what's really interesting? In my opinion, it's not just about DeepSeek's performance (though that's impressive too). It's about the idea it represents: a shift in how we think about AI development and accessibility. While giants like OpenAI and Google are building their walled gardens with increasingly expensive subscription and API pricing (yeah, I'm talking about the $200 plan!), DeepSeek is showing us there's another way.

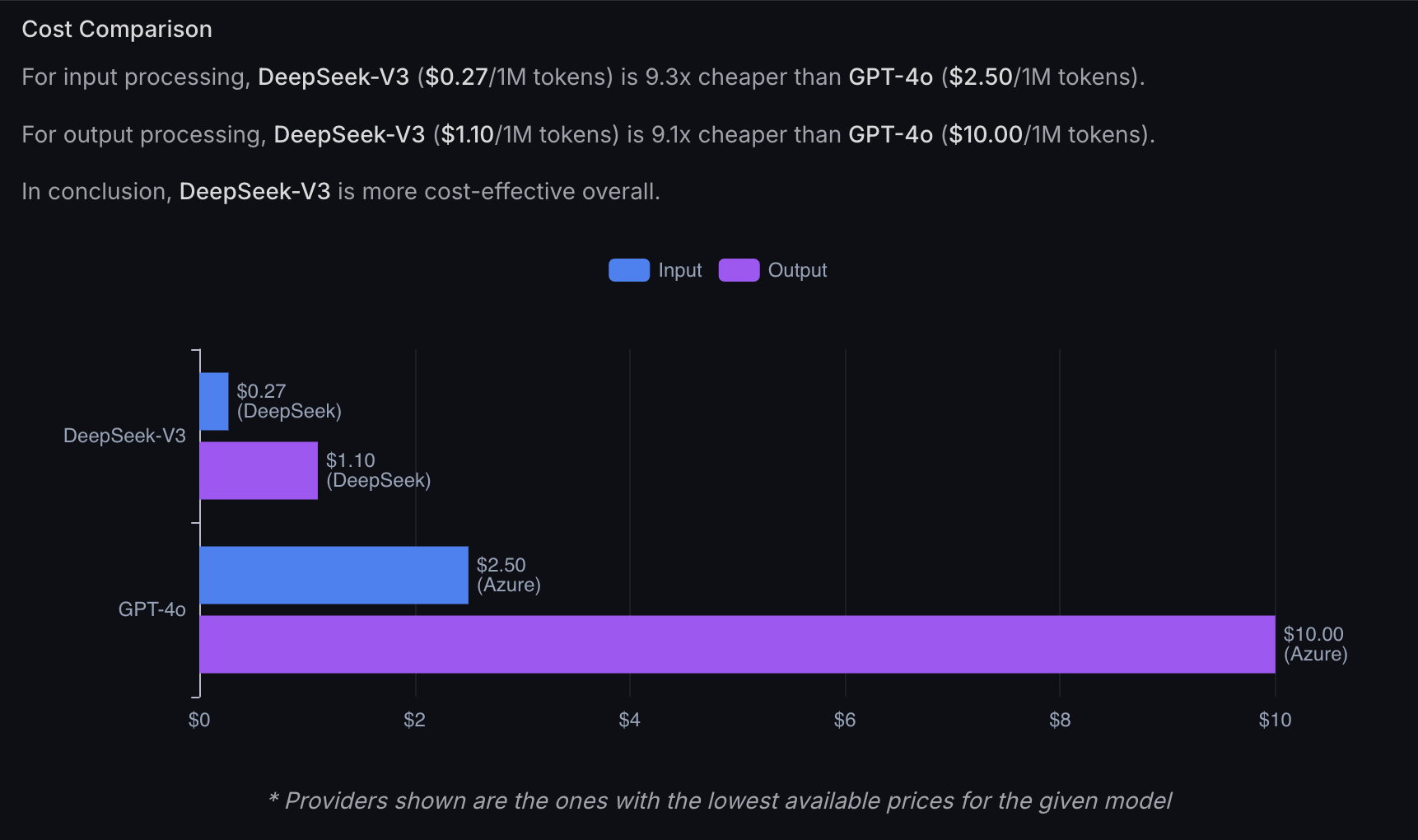

Here's the kicker: DeepSeek's models (V3 in this case) are not only performing remarkably well, but they're doing it at a fraction of the cost. I'm talking about API costs that make OpenAI's GPT-4 look like a luxury service. But more importantly, they're open-sourcing their model. Think about that for a moment - they're essentially giving away the blueprint for building advanced AI systems.

Now, I'm aware that there are lots of open-source models out there, and I use some of them for my personal stuff. But none of the ones I've tried so far match the performance of GPT-4 or Claude 3.5 Sonnet, let alone o1. A model with nearly the same performance (one might argue it's even better) that is ALSO open source changes the game!

Both Approaches

Remember when the internet was truly open? When you could build whatever you wanted without worrying about platform restrictions (IaaS, for example) or API costs? The AI industry is at a similar crossroads right now. On one side, we have the big tech approach: proprietary models, expensive APIs, and controlled access. On the other, we have the open-source movement and players like DeepSeek that (might) believe in democratising AI technology.

The contrast couldn't be clearer. While companies like OpenAI charge premium rates for their API access, DeepSeek is showing that high-quality AI can be both accessible and affordable. It's not just about cost savings - it's about freedom to innovate, to experiment, and to build without constraints.

The Hidden Cost of Proprietary AI

Let me put this in perspective: OpenAI just secured $6.6 billion in funding, pushing their valuation to a staggering $157 billion. They're even planning a joint venture called "Stargate" with Oracle and SoftBank, committing up to $500 billion over the next four years for AI infrastructure. That's the kind of money I'm talking about in the proprietary AI world.

But here's where it gets interesting: DeepSeek, a Chinese AI startup backed by the $8 billion hedge fund High-Flyer, reports training V3 (the base model behind R1) for about $5.6 million in GPU time on 2,048 Nvidia H800 GPUs. And guess what? They're achieving performance comparable to ChatGPT. It's like watching someone build a Ferrari competitor in their garage - and succeeding.

Why Open Source Matters More Than Ever

DeepSeek's approach is important and effective not just because of the cost savings, but because of how they're doing it. They've developed something called multi-head latent attention (MLA) and a sparse mixture-of-experts architecture (DeepSeekMoE), which together drastically reduce inference costs. While other companies are building walls, DeepSeek is opening doors.

Let me break down what makes their approach so special in my opinion:

- For R1-Zero, they applied reinforcement learning without a supervised fine-tuning step first, which reduces the need for expensive human-labeled data

- Their MoE architecture only activates a small subset (about 37 billion) of its roughly 671 billion parameters for each token (imagine having a huge team but only calling in the experts you need for each project)

- They offer distilled versions from 1.5 billion to 70 billion parameters, the smaller of which can run on consumer devices

- R1's code and weights are open source under an MIT license
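
To make the "only call in the experts you need" idea a bit more concrete, here's a tiny sketch of top-k expert routing in plain Python. It's a toy illustration of the general mixture-of-experts technique, not DeepSeek's actual implementation: the function names (`route_top_k`, `moe_forward`), the four scaling "experts", and the router scores are all made up for the example.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalise their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by weight."""
    selected = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in selected)
    return output, [i for i, _ in selected]

# Hypothetical experts: each one just scales its input by a different factor.
experts = [lambda x, f=f: f * x for f in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical router scores for one token: experts 1 and 3 score highest.
output, used = moe_forward(1.0, experts, [0.0, 5.0, 1.0, 5.0], k=2)
print(used)  # only 2 of the 4 experts were actually run
```

The point is that the cost of a forward pass scales with `k`, not with the total number of experts, which is why a model can have a huge parameter count and still be cheap to run per token.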

Now, let's talk numbers - because they're mind-blowing!

While ChatGPT reportedly needs 20,000 high-end GPUs, DeepSeek gets similar results with roughly 2,000. (To put that into perspective, I think Meta needed something like 31 million GPU hours for models of that size.) Their API costs $0.14 per million tokens compared to $7.50 for OpenAI's o1 - that's about 98% cheaper! They even offer 50 free daily messages on their chat platform.
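
If you want to check that percentage yourself, here's a quick back-of-the-envelope sketch. The two prices are the ones quoted above; the helper names and the 50-million-token workload are just assumptions I picked for illustration, and real pricing differs between input and output tokens.

```python
# Prices per million tokens, as quoted in this post.
DEEPSEEK_PRICE = 0.14
OPENAI_O1_PRICE = 7.50

def percent_cheaper(cheap, expensive):
    """How much cheaper `cheap` is than `expensive`, in percent."""
    return (1 - cheap / expensive) * 100

def token_cost(tokens, price_per_million):
    """Dollar cost for a given number of tokens."""
    return tokens / 1_000_000 * price_per_million

saving = percent_cheaper(DEEPSEEK_PRICE, OPENAI_O1_PRICE)
print(f"{saving:.1f}% cheaper")  # prints "98.1% cheaper"

# Hypothetical workload: 50 million tokens.
tokens = 50_000_000
print(f"DeepSeek: ${token_cost(tokens, DEEPSEEK_PRICE):.2f}")  # prints "DeepSeek: $7.00"
print(f"o1:       ${token_cost(tokens, OPENAI_O1_PRICE):.2f}")  # prints "o1:       $375.00"
```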

How I see the future

I believe we're at a critical juncture in AI development. The choices we make now about how AI technology is developed, shared, and controlled will have far-reaching consequences. DeepSeek's success isn't just a victory for one company - it's a proof of concept that open-source AI can compete with, and sometimes outperform, proprietary solutions.

The question isn't whether open-source AI will play a role in the future - it's how big that role will be. And companies like DeepSeek are showing us that the answer might be "bigger than we thought."

As someone who's been following AI development, I can't help but feel optimistic about what DeepSeek represents. The real revolution in AI might not come from the biggest companies with the most resources. It might come from the open-source community, powered by players like DeepSeek that are willing to share their advances with the world. And that's a future worth getting excited about.

Cheers
